The most profound shift in photography since the transition from film to digital is happening now—and most people don’t realize it yet.

That shift is computational photography, which refers to processing images, often as they’re captured, in a way that goes beyond simply recording the light that hits a camera’s image sensor. The terms machine learning (ML) and artificial intelligence (AI) also get bandied about when we’re talking about this broad spectrum of technologies, inevitably leading to confusion.

Does the camera do everything automatically now? Is there a place for photographers who want to shoot manually? Do cloud networks know everything about us from our photos? Have we lost control of our photos?

It’s easy to think “AI” and envision dystopian robots armed with smartphones elbowing us aside. Well, maybe it’s easy for me, because now that particular mini-movie is playing in a loop in my head. But over the past few years, I’ve fielded legitimate concerns from photographers who are anxious about the push toward incorporating AI and ML into the field of photography.

So let’s explore this fascinating space we’re in. In this twice-monthly column, I’ll share how these technologies are changing photography and how we can understand and take advantage of them. Just as you can take better photos when you grasp the relationships between aperture, shutter speed, and ISO, knowing how computational photography affects the way you shoot and edit is bound to improve your photography in general.

For now, I want to talk about just what computational photography is in general, and how it’s already impacting how we take photos, from capture to organization to editing.

Let’s Get the Terminology Out of the Way

Computational photography is an umbrella term that essentially means, “a microprocessor and software did extra work to create this image.”

True researchers of artificial intelligence may bristle at how the term AI has been adopted broadly, because we’re not talking about machines that can think for themselves. And yet, “AI” is used most often because it’s short and people have a general idea, based on fiction and cinema over the years, that it refers to some machine independence. Plus, it works really well in promotional materials. There’s a tendency to throw “AI” onto a product or feature name to make it sound cool and edgy, just as we used to add “cyber” to everything vaguely hinting at the internet.

A more accurate term is machine learning, ML, which is how much of the computational photography technologies are built. In ML, a software program is fed thousands or millions of examples of data—in our case, images—as the building blocks to “learn” information. For example, some apps can identify that a landscape photo you shot contains a sky, some trees, and a pickup truck. The software has ingested images that contain those objects and are identified as such. So when a photo contains a green, vertical, roughly triangular shape with appendages that resemble leaves, it’s identified as a tree.

Another term that you may run into is high dynamic range, HDR, which is achieved by blending several different exposures of the same scene, creating a result where a bright sky and a dark foreground are balanced in a way that a single shot couldn’t capture. In the early days of HDR, the photographer combined the exposures, resulting in garish, oversaturated images where every detail was illuminated and exaggerated. Now, that same approach happens in smartphone cameras automatically during capture, with much more finesse to create images that look more like what your eyes—which have a much higher dynamic range than a camera’s sensor—perceived at the time.

Smarter Capture

Perhaps the best example of computational photography is in your pocket or bag: the mobile phone, the ultimate point-and-shoot camera. It doesn’t feel disruptive, because the process of grabbing a shot on your phone is straightforward. You open the camera app, compose a picture on-screen, and tap the shutter button to capture the image.

Behind the scenes, though, your phone runs through millions of operations to get that photo: evaluating the exposure, identifying objects in the scene, capturing multiple exposures in a fraction of a second, and blending them together to create the photo displayed moments later.

In a very real sense, the photo you just captured is manufactured, a combination of exposures and algorithms that make judgments based not only on the lighting in the scene, but the preferences of the developers regarding how dark or light the scene should be rendered. It’s a far cry from removing a cap and exposing a strip of film with the light that comes through the lens.

But let’s take a step back and get pedantic for a moment. Digital photography, even using early digital cameras, is itself computational photography. The camera sensor records the light, but then it applies an algorithm to turn that digital information into colored pixels, and then typically compresses the image into a JPEG file that is optimized to look good while also keeping the file size small.

Traditional camera manufacturers like Canon, Nikon, and Sony have been slow to incorporate the types of computational photography technologies found in smartphones, for practical and no doubt institutional reasons. But they also haven’t been sitting idle. The bird eye tracking feature in the Sony Alpha 1, for example, uses subject recognition to identify birds in the frame in real-time.

Smarter Organization

For quite a while, apps such as Adobe Lightroom and Apple Photos have been able to identify faces in photos, making it easier to bring up all the images containing a specific person. Machine learning now enables software to recognize all sorts of objects, which can save you the trouble of entering keywords—a task that photographers seem quite reluctant to do. You can enter a search term and bring up matches without having touched the metadata in any of those photos.

If you think applying keywords is drudgery, what about culling a few thousand shots into a more manageable number of images that are actually good? Software such as Optyx can analyze all the images, flag the ones that are out of focus or severely underexposed, and mark those for removal. Photos with good exposure and sharp focus get elevated so you can end up evaluating several dozen, saving a lot of time.

Smarter Editing

The post-capture stage has seen a lot of ML innovation in recent years as developers add smarter features for editing photos. For instance, the Auto feature in Lightroom, which applies several adjustments based on what the image needs, improved dramatically when it started referencing Adobe’s Sensei cloud-based ML technology. Again, the software recognizes objects and scenes in the photo, compares it to similar images in its dataset, and makes better-informed choices in how to adjust the shot.

As another example, ML features can create complex selections in a few seconds, compared to the time it would take to hand-draw a selection using traditional tools. Skylum’s Luminar AI identifies objects, such as a person’s face, when it opens a photo. Using the Structure AI tool, you can add contrast to a scene and know that the effect won’t be applied to the person (which would be terribly unflattering). Or, in Lightroom, Lightroom Classic, Photoshop, and Photoshop Elements, the Select Subject feature makes an editable selection around the prominent thing in the photo, including a person’s hair, which is difficult to do manually.

Most ML features are designed to relieve pain points that otherwise take up valuable editing time, but some are able to simply do a better job than previous approaches. If your image was shot at a high ISO under dark lighting, it probably contains a lot of digital noise. De-noising tools have been available for some time, but they usually risk turning the photo into a collection of colorful smears. Now, apps such as ON1 Photo RAW and Topaz DeNoise AI use ML technology to remove noise and retain detail.

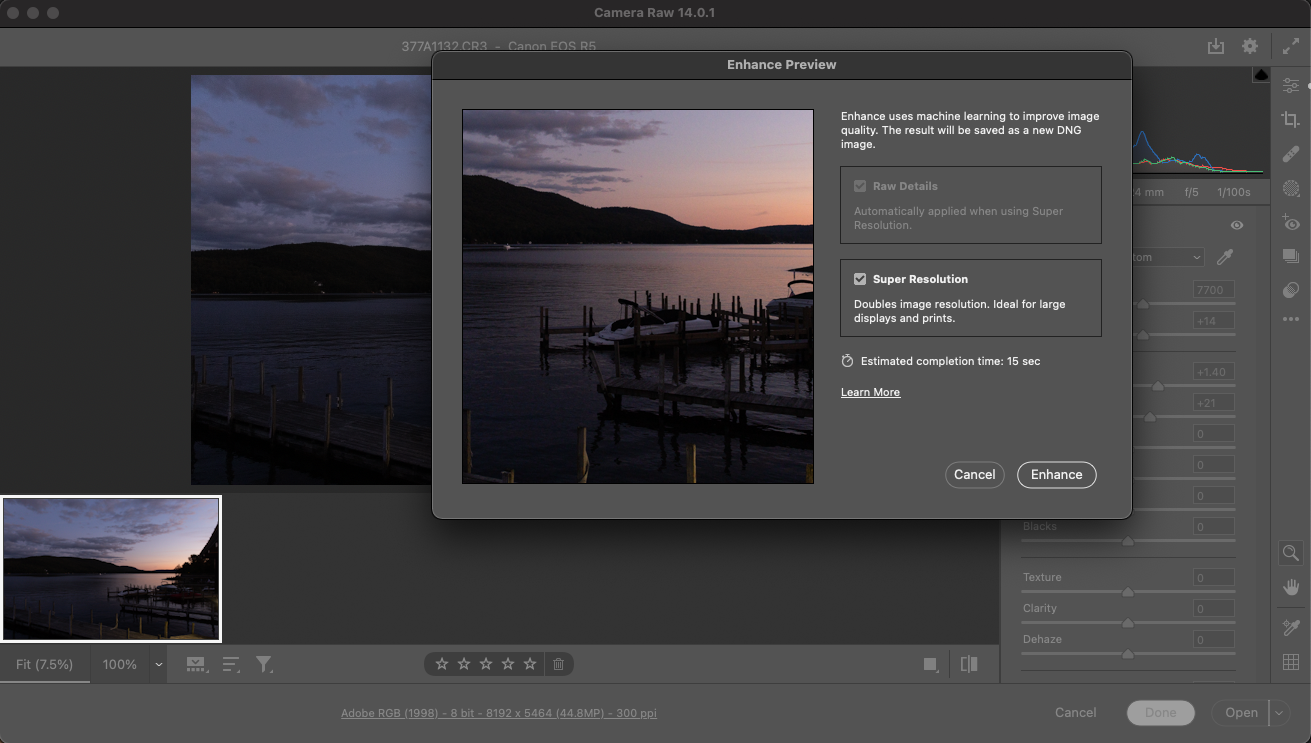

And for my last example, I want to point to the capability to enlarge low-resolution images. Upsizing a digital photo carried the risk of softening the image quality because you’re often just making existing pixels larger. Now, ML-based resizing features, such as Pixelmator Pro’s ML Super Resolution or Photoshop’s Super Resolution, can increase the resolution of a shot, while smartly keeping sharp in-focus areas crisp.

The ‘Smarter Image’

I’m skimming quickly over the possibilities to give you a rough idea of how machine learning and computational photography are already affecting photographers. In upcoming columns, I’m going to be looking at these and other features in more depth. And along the way, I’ll cover news and interesting developments in this field that is rapidly growing. You can’t swing a dead Pentax without hitting something that’s added “AI” to its name or marketing materials these days.

And what about me, your smart(-ish/-aleck) columnist? I’ve written about technology and creative arts professionally for over 25 years, including several photography-specific books published by Pearson Education and Rocky Nook, and hundreds of articles for outlets such as DPReview, CreativePro, and The Seattle Times. I co-host two podcasts, lead photo workshops in the Pacific Northwest, and drink a lot of coffee.

It’s an exciting time to be a photographer. Unless you’re a dystopian robot, in which case you’re probably tired of elbowing photographers.

About the author

Jeff Carlson (@jeffcarlson, [email protected]) is the author of The Photographer’s Guide to Luminar AI, Take Control of Your Digital Photos, and Take Control of Your Digital Storage, among many other books and articles. He co-hosts the podcastsPhotoActive and Photocombobulate. You can see more of Jeff’s writing and photography at Jeffcarlson.com.

The post Computational photography explained – the next age of photography is already here appeared first on Popular Photography.